November 8, 2016, 9:30 am

Today the CNCF is pleased to launch a new training, certification and Kubernetes Managed Service Provider (KMSP) program. The goal of the program is to ensure enterprises get the support they’re looking for to get up to speed and roll out new applications more quickly and more efficiently. The Linux Foundation, in partnership with CNCF, will develop and operate the Kubernetes training and certification.

Interested in this course? Sign up here to pre-register. The course, expected to be available in early 2017, is open now at the discounted price of $99 (regularly $199) for a limited time, and the certification program is expected to be available in the second quarter of 2017. The KMSP program is a pre-qualified tier of highly vetted service providers who have deep experience helping enterprises successfully adopt Kubernetes. The KMSP partners offer SLA-backed Kubernetes support, consulting, professional services and training for organizations embarking on their Kubernetes journey. In contrast to the Kubernetes Service Partners program outlined recently in this blog, to become a Kubernetes Managed Service Provider the following additional requirements must be met: three or more certified engineers, an active contributor to Kubernetes, and a business model to support enterprise end users. As part of the program, a new CNCF Certification Working Group is starting up now. The group will help define the program's open source curriculum, which will be available under the Creative Commons By Attribution 4.0 International license for anyone to use. Any Kubernetes expert can join the working group via this link. Google has committed to assist, and many others, including Apprenda, Container Solutions, CoreOS, Deis and Samsung SDS, have expressed interest in participating in the Working Group.To learn more about the new program and the first round of KMSP partners that we expect to grow weekly, check out today's announcement here.

↧

November 17, 2016, 3:55 pm

In Kubernetes 1.4, we introduced a new node performance analysis tool, called the node performance dashboard, to visualize and explore the behavior of the Kubelet in much richer details. This new feature will make it easy to understand and improve code performance for Kubelet developers, and lets cluster maintainer set configuration according to provided Service Level Objectives (SLOs).Background

A Kubernetes cluster is made up of both master and worker nodes. The master node manages the cluster’s state, and the worker nodes do the actual work of running and managing pods. To do so, on each worker node, a binary, called Kubelet, watches for any changes in pod configuration, and takes corresponding actions to make sure that containers run successfully. High performance of the Kubelet, such as low latency to converge with new pod configuration and efficient housekeeping with low resource usage, is essential for the entire Kubernetes cluster. To measure this performance, Kubernetes uses end-to-end (e2e) tests to continuously monitor benchmark changes of latest builds with new features.

Kubernetes SLOs are defined by the following benchmarks:

* API responsiveness: 99% of all API calls return in less than 1s.* Pod startup time: 99% of pods and their containers (with pre-pulled images) start within 5s.

Prior to 1.4 release, we’ve only measured and defined these at the cluster level, opening up the risk that other factors could influence the results. Beyond these, we also want to have more performance related SLOs such as the maximum number of pods for a specific machine type allowing maximum utilization of your cluster. In order to do the measurement correctly, we want to introduce a set of tests isolated to just a node’s performance. In addition, we aim to collect more fine-grained resource usage and operation tracing data of Kubelet from the new tests.Data Collection

The node specific density and resource usage tests are now added into e2e-node test set since 1.4. The resource usage is measured by a standalone cAdvisor pod for flexible monitoring interval (comparing with Kubelet integrated cAdvisor). The performance data, such as latency and resource usage percentile, are recorded in persistent test result logs. The tests also record time series data such as creation time, running time of pods, as well as real-time resource usage. Tracing data of Kubelet operations are recorded in its log stored together with test results.Node Performance Dashboard

Since Kubernetes 1.4, we are continuously building the newest Kubelet code and running node performance tests. The data is collected by our new performance dashboard available at node-perf-dash.k8s.io. Figure 1 gives a preview of the dashboard. You can start to explore it by selecting a test, either using the drop-down list of short test names (region (a)) or by choosing test options one by one (region (b)). The test details show up in region (c) containing the full test name from Ginkgo (the Go test framework used by Kubernetes). Then select a node type (image and machine) in region (d).![]() |

| Figure 1. Select a test to display in node performance dashboard. |

The "BUILDS" page exhibits the performance data across different builds (Figure 2). The plots include pod startup latency, pod creation throughput, and CPU/memory usage of Kubelet and runtime (currently Docker). In this way it’s easy to monitor the performance change over time as new features are checked in.

![]() |

| Figure 2. Performance data across different builds. |

Compare Different Node ConfigurationsIt’s always interesting to compare the performance between different configurations, such as comparing startup latency of different machine types, different numbers of pods, or comparing resource usage of hosting different number of pods. The dashboard provides a convenient way to do this. Just click the "Compare it" button the right up corner of test selection menu (region (e) in Figure 1). The selected tests will be added to a comparison list in the "COMPARISON" page, as shown in Figure 3. Data across a series of builds are aggregated to a single value to facilitate comparison and are displayed in bar charts.

![]() |

| Figure 3. Compare different test configurations. |

Time Series and Tracing: Diving Into Performance Data

Pod startup latency is an important metric for Kubelet, especially when creating a large number of pods per node. Using the dashboard you can see the change of latency, for example, when creating 105 pods, as shown in Figure 4. When you see the highly variable lines, you might expect that the variance is due to different builds. However, as these test here were run against the same Kubernetes code, we can conclude the variance is due to performance fluctuation. The variance is close to 40s when we compare the 99% latency of build #162 and #173, which is very large. To drill into the source of the fluctuation, let’s check out the "TIME SERIES" page.

![]() |

| Figure 4. Pod startup latency when creating 105 pods. |

Looking specifically at build #162, we are able to see that the tracing data plotted in the pod creation latency chart (Figure 5). Each curve is an accumulated histogram of the number of pod operations which have already arrive at a certain tracing probe. The timestamp of tracing pod is either collected from the performance tests or by parsing the Kubelet log. Currently we collect the following tracing data:

- "create" (in test): the test creates pods through API client;

- "running" (in test): the test watches that pods are running from API server;

- "pod_config_change": pod config change detected by Kubelet SyncLoop;

- "runtime_manager": runtime manager starts to create containers;

- "infra_container_start": the infra container of a pod starts;

- "container_start': the container of a pod starts;

- "pod_running": a pod is running;

- "pod_status_running": status manager updates status for a running pod;

The time series chart illustrates that it is taking a long time for the status manager to update pod status (the data of "running" is not shown since it overlaps with "pod_status_running"). We figure out this latency is introduced due to the query per second (QPS) limits of Kubelet to the API server (default is 5). After being aware of this, we find in additional tests that by increasing QPS limits, curve "running" gradually converges with "pod_running', and results in much lower latency. Therefore the previous e2e test pod startup results reflect the combined latency of both Kubelet and time of uploading status, the performance of Kubelet is thus under-estimated.

![]() |

| Figure 5. Time series page using data from build #162. |

Further, by comparing the time series data of build #162 (Figure 5) and build #173 (Figure 6), we find that the performance pod startup latency fluctuation actually happens during updating pod statuses. Build #162 has several straggler "pod_status_running" events with a long latency tails. It thus provides useful ideas for future optimization.

![]() |

| Figure 6. Pod startup latency of build #173. |

In future we plan to use events in Kubernetes which has a fixed log format to collect tracing data more conveniently. Instead of extracting existing log entries, then you can insert your own tracing probes inside Kubelet and obtain the break-down latency of each segment.

You can check the latency between any two probes across different builds in the “TRACING” page, as shown in Figure 7. For example, by selecting "pod_config_change" as the start probe, and "pod_status_running' as the end probe, it gives the latency variance of Kubelet over continuous builds without status updating overhead. With this feature, developers are able to monitor the performance change of a specific part of code inside Kubelet.

![]() |

| Figure 7. Plotting latency between any two probes. |

Future Work

The

node performance dashboard is a brand new feature. It is still alpha version under active development. We will keep optimizing the data collecting and visualization, providing more tests, metrics and tools to the developers and the cluster maintainers.

Please join our community and help us build the future of Kubernetes! If you’re particularly interested in nodes or performance testing, participate by chatting with us in our

Slack channel or join our meeting which meets every Tuesday at 10 AM PT on this

SIG-Node Hangout.

--Zhou Fang, Software Engineering Intern, Google

↧

↧

November 18, 2016, 1:05 pm

Editor's note: Today’s post is by Stefan Thies, Developer Evangelist, at Sematext, showing key Kubernetes metrics and log elements to help you troubleshoot and tune Docker and Kubernetes.

Managing microservices in containers is typically done with Cluster Managers and Orchestration tools. Each container platform has a slightly different set of options to deploy containers or schedule tasks on each cluster node. Because we do container monitoring and logging at Sematext, part of our job is to share our knowledge of these tools, especially as it pertains to container observability and devops. Today we’ll show a tutorial for Container Monitoring and Log Collection on Kubernetes.Dynamic Deployments Require Dynamic MonitoringThe high level of automation for the container and microservice lifecycle makes the monitoring of Kubernetes more challenging than in more traditional, more static deployments. Any static setup to monitor specific application containers would not work because Kubernetes makes its own decisions according to the defined deployment rules. It is not only the deployed microservices that need to be monitored. It is equally important to watch metrics and logs for Kubernetes core services themselves, such as Kubernetes Master running etcd, controller-manager, scheduler and apiserver and Kubernetes Workers (fka minions) running kubelet and proxy service. Having a centralized place to keep an eye on all these services, their metrics and logs helps one spot problems in the cluster infrastructure. Kubernetes core services could be installed on bare metal, in virtual machines or as containers using Docker. Deploying Kubernetes core services in containers could be helpful with deployment and monitoring operations - tools for container monitoring would cover both core services and application containers. So how does one monitor such a complex and dynamic environment?Agent for Kubernetes Metrics and LogsThere are a number of open source docker monitoring and logging projects one can cobble together to build a monitoring and log collection system (or systems). The advantage is that the code is all free. The downside is that this takes times - both initially when setting it up and later when maintaining. That’s why we built Sematext Docker Agent - a modern, Docker-aware metrics, events, and log collection agent. It runs as a tiny container on every Docker host and collects logs, metrics and events for all cluster nodes and all containers. It discovers all containers (one pod might contain multiple containers) including containers for Kubernetes core services, if core services are deployed in Docker containers. Let’s see how to deploy this agent.Deploying Agent to all Kubernetes Nodes

Kubernetes provides DeamonSets, which ensure pods are added to nodes as nodes are added to the cluster. We can use this to easily deploy Sematext Agent to each cluster node!Configure Sematext Docker Agent for KubernetesLet’s assume you’ve created an SPM app for your Kubernetes metrics and events, and a Logsene app for your Kubernetes logs, each of which comes with its own token. The Sematext Docker Agent README lists all configurations (e.g. filter for specific pods/images/containers), but we’ll keep it simple here.- Grab the latest sematext-agent-daemonset.yml (raw plain-text) template (also shown below)

- Save it somewhere on disk

- Replace the SPM_TOKEN and LOGSENE_TOKEN placeholders with your SPM and Logsene App tokens

apiVersion: extensions/v1beta1

kind: DaemonSet

metadata:

name: sematext-agent

spec:

template:

metadata:

labels:

app: sematext-agent

spec:

selector: {}

dnsPolicy: "ClusterFirst"

restartPolicy: "Always"

containers:

- name: sematext-agent

image: sematext/sematext-agent-docker:latest

imagePullPolicy: "Always"

env:

- name: SPM_TOKEN

value: "REPLACE THIS WITH YOUR SPM TOKEN"

- name: LOGSENE_TOKEN

value: "REPLACE THIS WITH YOUR LOGSENE TOKEN"

- name: KUBERNETES

value: "1"

volumeMounts:

- mountPath: /var/run/docker.sock

name: docker-sock

- mountPath: /etc/localtime

name: localtime

volumes:

- name: docker-sock

hostPath:

path: /var/run/docker.sock

- name: localtime

hostPath:

path: /etc/localtime |

Run Agent as DaemonSet

Activate Sematext Agent Docker with kubectl:

> kubectl create -f sematext-agent-daemonset.yml daemonset "sematext-agent-daemonset" created |

Now let’s check if the agent got deployed to all nodes:

> kubectl get pods NAME READY STATUS RESTARTS AGE sematext-agent-nh4ez 0/1 ContainerCreating 0 6s sematext-agent-s47vz 0/1 ImageNotReady 0 6s |

The status “ImageNotReady” or “ContainerCreating” might be visible for a short time because Kubernetes must download the image for sematext/sematext-agent-docker first. The setting imagePullPolicy: "Always" specified in sematext-agent-daemonset.yml makes sure that Sematext Agent gets updated automatically using the image from Docker-Hub.

If we check again we’ll see Sematext Docker Agent got deployed to (all) cluster nodes:

> kubectl get pods -l sematext-agent NAME READY STATUS RESTARTS AGE sematext-agent-nh4ez 1/1 Running 0 8s sematext-agent-s47vz 1/1 Running 0 8s |

Less than a minute after the deployment you should see your Kubernetes metrics and logs! Below are screenshots of various out of the box reports and explanations of various metrics’ meanings.

Interpretation of Kubernetes Metrics

The metrics from all Kubernetes nodes are collected in a single SPM App, which aggregates metrics on several levels:

- Cluster - metrics aggregated over all nodes displayed in SPM overview

- Host / node level - metrics aggregated per node

- Docker Image level - metrics aggregated by image name, e.g. all nginx webserver containers

- Docker Container level - metrics aggregated for a single container

![]() |

| Host and Container Metrics from the Kubernetes Cluster |

Each detailed chart has filter options for Node, Docker Image, and Docker Container. As Kubernetes uses the pod name in the name of the Docker Containers a search by pod name in the Docker Container filter makes it easy to select all containers for a specific pod.

Let’s have a look at a few Kubernetes (and Docker) key metrics provided by SPM.

Host Metrics such as CPU, Memory and Disk space usage. Docker images and containers consume more disk space than regular processes installed on a host. For example, an application image might include a Linux operating system and might have a size of 150-700 MB depending on the size of the base image and installed tools in the container. Data containers consume disk space on the host as well. In our experience watching the disk space and using cleanup tools is essential for continuous operations of Docker hosts.

Container count - represents the number of running containers per host

![]() |

| Container Counters per Kubernetes Node over time |

Container Memory and Memory Fail Counters. These metrics are important to watch and very important to tune applications. Memory limits should fit the footprint of the deployed pod (application) to avoid situations where Kubernetes uses default limits (e.g. defined for a namespace), which could lead to OOM kills of containers. Memory fail counters reflect the number of failed memory allocations in a container, and in case of OOM kills a Docker Event is triggered. This event is then displayed in SPM because

Sematext Docker Agents collects all Docker Events. The best practice is to tune memory setting in a few iterations:

- Monitor memory usage of the application container

- Set memory limits according to the observations

- Continue monitoring of memory, memory fail counters, and Out-Of-Memory events. If OOM events happen, the container memory limits may need to be increased, or debugging is required to find the reason for the high memory consumptions.

![]() |

| Container memory usage, limits and fail counters |

Container CPU usage and throttled CPU time. The CPU usage can be limited by CPU shares - unlike memory, CPU usage it is not a hard limit. Containers might use more CPU as long the resource is available, but in situations where other containers need the CPU limits apply and the CPU gets throttled to the limit.

![]()

There are more

docker metrics to watch, like disk I/O throughput, network throughput and network errors for containers, but let’s continue by looking at Kubernetes Logs next.

Understand Kubernetes Logs

Kubernetes containers’ logs are not much different from Docker container logs. However, Kubernetes users need to view logs for the deployed pods. That’s why it is very useful to have Kubernetes-specific information available for log search, such as:

- Kubernetes name space

- Kubernetes pod name

- Kubernetes container name

- Docker image name

- Kubernetes UID

Sematext Docker Agent extracts this information from the Docker container names and tags all logs with the information mentioned above. Having these data extracted in individual fields makes it is very easy to watch logs of deployed pods, build reports from logs, quickly narrow down to problematic pods while troubleshooting, and so on! If Kubernetes core components (such as kubelet, proxy, api server) are deployed via Docker the Sematext Docker Agent will collect Kubernetes core components logs as well.

![]() |

| All logs from Kubernetes containers in Logsene |

There are many other useful features Logsene and Sematext Docker Agent give you out of the box, such as:

- Automatic format detection and parsing of logs

- Sematext Docker Agent includes patterns to recognize and parse many log formats

- Custom pattern definitions for specific images and application types

- Automatic Geo-IP enrichment for container logs

- Filtering logs e.g. to exclude noisy services

- Masking of sensitive data in specific log fields (phone numbers, payment information, authentication tokens)

- Alerts and scheduled reports based on logs

- Analytics for structured logs e.g. in Kibana or Grafana

--Stefan Thies, Developer Evangelist, at Sematext

↧

November 22, 2016, 7:20 am

Editor's note: Today’s post is by Sebastien Goasguen, Founder of Skippbox, showing a new tool to move from ‘docker-compose’ to Kubernetes.

At Skippbox, we developed kompose a tool to automatically transform your Docker Compose application into Kubernetes manifests. Allowing you to start a Compose application on a Kubernetes cluster with a single kompose up command. We’re extremely happy to have donated kompose to the Kubernetes Incubator. So here’s a quick introduction about it and some motivating factors that got us to develop it.Docker is terrific for developers. It allows everyone to get started quickly with an application that has been packaged in a Docker image and is available on a Docker registry. To build a multi-container application, Docker has developed Docker-compose (aka Compose). Compose takes in a yaml based manifest of your multi-container application and starts all the required containers with a single command docker-compose up. However Compose only works locally or with a Docker Swarm cluster.

But what if you wanted to use something else than Swarm? Like Kubernetes of course.The Compose format is not a standard for defining distributed applications. Hence you are left re-writing your application manifests in your container orchestrator of choice.

We see kompose as a terrific way to expose Kubernetes principles to Docker users as well as to easily migrate from Docker Swarm to Kubernetes to operate your applications in production.Over the summer, Kompose has found a new gear with help from Tomas Kral and Suraj Deshmukh from Red Hat, and Janet Kuo from Google. Together with our own lead kompose developer Nguyen An-Tu they are making kompose even more exciting. We proposed Kompose to the Kubernetes Incubator within the SIG-apps and we received approval from the general Kubernetes community; you can now find kompose in the Kubernetes Incubator.Kompose now supports Docker-compose v2 format, persistent volume claims have been added recently, as well as multiple container per pods. It can also be used to target Openshift deployments, by specifying a different provider than the default Kubernetes. Kompose is also now available in Fedora packages and we look forward to see it in CentOS distributions in the coming weeks.kompose is a single Golang binary that you build or install from the release on GitHub. Let’s skip the build instructions and dive straight into an example.Let's take it for a spin!Guestbook application with DockerThe Guestbook application has become the canonical example for Kubernetes. In Docker-compose format, the guestbook can be started with this minimal file:version: "2"

services: redis-master: image: gcr.io/google_containers/redis:e2e ports: - "6379" redis-slave: image: gcr.io/google_samples/gb-redisslave:v1 ports: - "6379" environment: - GET_HOSTS_FROM=dns frontend: image: gcr.io/google-samples/gb-frontend:v4 ports: - "80:80" environment: - GET_HOSTS_FROM=dns |

It consists of three services. A redis-master node, a set of redis-slave that can be scaled and find the redis-master via its DNS name. And a PHP frontend that exposes itself on port 80. The resulting application allows you to leave short messages which are stored in the redis cluster.To get it started with docker-compose on a vanilla Docker host do:$ docker-compose -f docker-guestbook.yml up -d Creating network "examples_default" with the default driver Creating examples_redis-slave_1 Creating examples_frontend_1 Creating examples_redis-master_1 |

So far so good, this is plain Docker usage. Now let’s see how to get this on Kubernetes without having to re-write anything.

Guestbook with 'kompose'

Kompose currently has three main commands up, down and convert. Here for simplicity we will show a single usage to bring up the Guestbook application.

Similarly to docker-compose, we can use the kompose up command pointing to the Docker-compose file representing the Guestbook application. Like so:

$ kompose -f ./examples/docker-guestbook.yml up We are going to create Kubernetes deployment and service for your dockerized application. If you need more kind of controllers, use 'kompose convert' and 'kubectl create -f' instead.

INFO[0000] Successfully created service: redis-master INFO[0000] Successfully created service: redis-slave INFO[0000] Successfully created service: frontend INFO[0000] Successfully created deployment: redis-master INFO[0000] Successfully created deployment: redis-slave INFO[0000] Successfully created deployment: frontend

Application has been deployed to Kubernetes. You can run 'kubectl get deployment,svc' for details. |

kompose automatically converted the Docker-compose file into Kubernetes objects. By default, it created one deployment and one service per compose services. In addition it automatically detected your current Kubernetes endpoint and created the resources onto it. A set of flags can be used to generate Replication Controllers, Replica Sets or Daemon Sets instead of Deployments.

And that's it! Nothing else to do, the conversion happened automatically.

Now, if you already now Kubernetes a bit, you’re familiar with the client kubectl and you can check what was created on your cluster.

$ kubectl get pods,svc,deployments NAME READY STATUS RESTARTS AGE frontend-3780173733-0ayyx 1/1 Running 0 1m redis-master-3028862641-8miqn 1/1 Running 0 1m redis-slave-3788432149-t3ejp 1/1 Running 0 1m NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE frontend 10.0.0.34 <none> 80/TCP 1m redis-master 10.0.0.219 <none> 6379/TCP 1m redis-slave 10.0.0.84 <none> 6379/TCP 1m NAME DESIRED CURRENT UP-TO-DATE

AVAILABLE AGE frontend 1 1 1 1 1m redis-master 1 1 1 1 1m redis-slave 1 1 1 1 1m |

Hopefully this gave you a quick tour of kompose and got you excited. They are more exciting features, like creating different type of resources, creating Helm charts and even using the experimental Docker bundle format as input. Check Lachlan Evenson’s blog on using a Docker bundle with Kubernetes. For an overall demo, see our talk from KubeCon

Head over to the Kubernetes Incubator and check out kompose, it will help you move easily from your Docker compose applications to Kubernetes clusters in production.

↧

December 8, 2016, 11:00 am

Editor's note: Today’s post is by Bernard Van De Walle, Kubernetes Lead Engineer, at Aporeto, showing how they took a new approach to the Kubernetes network policy enforcement.

Kubernetes Network Policies

Kubernetes supports a new API for network policies that provides a sophisticated model for isolating applications and reducing their attack surface. This feature, which came out of the SIG-Network group, makes it very easy and elegant to define network policies by using the built-in labels and selectors Kubernetes constructs.

Kubernetes has left it up to third parties to implement these network policies and does not provide a default implementation.

We want to introduce a new way to think about “Security” and “Network Policies”. We want to show that security and reachability are two different problems, and that security policies defined using endpoints (pods labels for example) do not specifically need to be implemented using network primitives.

Most of us at Aporeto come from a Network/SDN background, and we knew how to implement those policies by using traditional networking and firewalling: Translating the pods identity and policy definitions to network constraints, such as IP addresses, subnets, and so forth.

However, we also knew from past experiences that using an external control plane also introduces a whole new set of challenges: This distribution of ACLs requires very tight synchronization between Kubernetes workers; and every time a new pod is instantiated, ACLs need to be updated on all other pods that have some policy related to the new pod. Very tight synchronization is fundamentally a quadratic state problem and, while shared state mechanisms can work at a smaller scale, they often have convergence, security, and eventual consistency issues in large scale clusters.

From Network Policies to Security Policies

At Aporeto, we took a different approach to the network policy enforcement, by actually decoupling the network from the policy. We open sourced our solution as Trireme, which translates the network policy to an authorization policy, and it implements a transparent authentication and authorization function for any communication between pods. Instead of using IP addresses to identify pods, it defines a cryptographically signed identity for each pod as the set of its associated labels. Instead of using ACLs or packet filters to enforce policy, it uses an authorization function where a container can only receive traffic from containers with an identity that matches the policy requirements.

The authentication and authorization function in Trireme is overlaid on the TCP negotiation sequence. Identity (i.e. set of labels) is captured as a JSON Web Token (JWT), signed by local keys, and exchanged during the Syn/SynAck negotiation. The receiving worker validates that the JWTs are signed by a trusted authority (authentication step) and validates against a cached copy of the policy that the connection can be accepted. Once the connection is accepted, the rest of traffic flows through the Linux kernel and all of the protections that it can potentially offer (including conntrack capabilities if needed). The current implementation uses a simple user space process that captures the initial negotiation packets and attaches the authorization information as payload. The JWTs include nonces that are validated during the Ack packet and can defend against man-in-the-middle or replay attacks.

![]()

Kubernetes is unique in its ability to scale and provide an extensible security support for the deployment of containers and microservices. Trireme provides a simple, secure, and scalable mechanism for enforcing these policies. You can deploy and try Trireme on top of Kubernetes by using a provided Daemon Set. You'll need to modify some of the YAML parameters based on your cluster architecture. All the steps are described in detail in the deployment GitHub folder. The same folder contains an example 3-tier policy that you can use to test the traffic pattern.To learn more, download the code, and engage with the project, visit:- Trireme on GitHub

- Trireme for Kubernetes by Aporeto on GitHub

--Bernard Van De Walle, Kubernetes lead engineer, Aporeto

↧

↧

December 13, 2016, 9:00 am

Today we’re announcing the release of Kubernetes 1.5. This release follows close on the heels of KubeCon/CloundNativeCon, where users gathered to share how they’re running their applications on Kubernetes. Many of you expressed interest in running stateful applications in containers with the eventual goal of running all applications on Kubernetes. If you have been waiting to try running a distributed database on Kubernetes, or for ways to guarantee application disruption SLOs for stateful and stateless apps, this release has solutions for you.

StatefulSet and PodDisruptionBudget are moving to beta. Together these features provide an easier way to deploy and scale stateful applications, and make it possible to perform cluster operations like node upgrade without violating application disruption SLOs.

You will also find usability improvements throughout the release, starting with the kubectl command line interface you use so often. For those who have found it hard to set up a multi-cluster federation, a new command line tool called ‘kubefed’ is here to help. And a much requested multi-zone Highly Available (HA) master setup script has been added to kube-up.

Did you know the Kubernetes community is working to support Windows containers? If you have .NET developers, take a look at the work on Windows containers in this release. This work is in early stage alpha and we would love your feedback.

Lastly, for those interested in the internals of Kubernetes, 1.5 introduces Container Runtime Interface or CRI, which provides an internal API abstracting the container runtime from kubelet. This decoupling of the runtime gives users choice in selecting a runtime that best suits their needs. This release also introduces containerized node conformance tests that verify that the node software meets the minimum requirements to join a Kubernetes cluster.

What’s New

StatefulSet beta (formerly known as PetSet) allows workloads that require persistent identity or per-instance storage to be created, scaled, deleted and repaired on Kubernetes. You can use StatefulSets to ease the deployment of any stateful service, and tutorial examples are available in the repository. In order to ensure that there are never two pods with the same identity, the Kubernetes node controller no longer force deletes pods on unresponsive nodes. Instead, it waits until the old pod is confirmed dead in one of several ways: automatically when the kubelet reports back and confirms the old pod is terminated; automatically when a cluster-admin deletes the node; or when a database admin confirms it is safe to proceed by force deleting the old pod. Users are now warned if they try to force delete pods via the CLI. For users who will be migrating from PetSets to StatefulSets, please follow the upgrade guide.

PodDisruptionBudget beta is an API object that specifies the minimum number or minimum percentage of replicas of a collection of pods that must be up at any time. With PodDisruptionBudget, an application deployer can ensure that cluster operations that voluntarily evict pods will never take down so many simultaneously as to cause data loss, an outage, or an unacceptable service degradation. In Kubernetes 1.5 the “kubectl drain” command supports PodDisruptionBudget, allowing safe draining of nodes for maintenance activities, and it will soon also be used by node upgrade and cluster autoscaler (when removing nodes). This can be useful for a quorum based application to ensure the number of replicas running is never below the number needed for quorum, or for a web front end to ensure the number of replicas serving load never falls below a certain percentage.

Kubefed alpha is a new command line tool to help you manage federated clusters, making it easy to deploy new federation control planes and add or remove clusters from existing federations. Also new in cluster federation is the addition of ConfigMaps alpha and DaemonSets alpha and deployments alpha to the federation API allowing you to create, update and delete these objects across multiple clusters from a single endpoint.

HA Masters alpha provides the ability to create and delete clusters with highly available (replicated) masters on GCE using the kube-up/kube-down scripts. Allows setup of zone distributed HA masters, with at least one etcd replica per zone, at least one API server per zone, and master-elected components like scheduler and controller-manager distributed across zones.Windows server containers alpha provides initial support for Windows Server 2016 nodes and scheduling Windows Server Containers.

Container Runtime Interface (CRI) alpha introduces the v1 CRI API to allow pluggable container runtimes; an experimental docker-CRI integration is ready for testing and feedback.

Node conformance test beta is a containerized test framework that provides a system verification and functionality test for nodes. The test validates whether the node meets the minimum requirements for Kubernetes; a node that passes the tests is qualified to join a Kubernetes. Node conformance test is available at: gcr.io/google_containers/node-test:0.2 for users to verify node setup.

These are just some of the highlights in our last release for the year. For a complete list please visit the release notes.

AvailabilityKubernetes 1.5 is available for download here on GitHub and via get.k8s.io. To get started with Kubernetes, try one of the new interactive tutorials. Don’t forget to take 1.5 for a spin before the holidays!

User AdoptionIt’s been a year-and-a-half since GA, and the rate of Kubernetes user adoption continues to surpass estimates. Organizations running production workloads on Kubernetes include the world's largest companies, young startups, and everything in between. Since Kubernetes is open and runs anywhere, we’ve seen adoption on a diverse set of platforms; Pokémon Go (Google Cloud), Ticketmaster (AWS), SAP (OpenStack), Box (bare-metal), and hybrid environments that mix-and-match the above. Here are a few user highlights:- Yahoo! JAPAN -- built an automated tool chain making it easy to go from code push to deployment, all while running OpenStack on Kubernetes.

- Walmart -- will use Kubernetes with OneOps to manage its incredible distribution centers, helping its team with speed of delivery, systems uptime and asset utilization.

- Monzo -- a European startup building a mobile first bank, is using Kubernetes to power its core platform that can handle extreme performance and consistency requirements.

Kubernetes EcosystemThe Kubernetes ecosystem is growing rapidly, including Microsoft's support for Kubernetes in Azure Container Service, VMware's integration of Kubernetes in its Photon Platform, and Canonical’s commercial support for Kubernetes. This is in addition to the thirty plus Technology & Service Partners that already provide commercial services for Kubernetes users.

The CNCF recently announced the Kubernetes Managed Service Provider (KMSP) program, a pre-qualified tier of service providers with experience helping enterprises successfully adopt Kubernetes. Furthering the knowledge and awareness of Kubernetes, The Linux Foundation, in partnership with CNCF, will develop and operate the Kubernetes training and certification program -- the first course designed is Kubernetes Fundamentals.

Community VelocityIn the past three months we’ve seen more than a hundred new contributors join the project with some 5,000 commits pushed, reaching new milestones by bringing the total for the core project to 1,000+ contributors and 40,000+ commits. This incredible momentum is only possible by having an open design, being open to new ideas, and empowering an open community to be welcoming to new and senior contributors alike. A big thanks goes out to the release team for 1.5 -- Saad Ali of Google, Davanum Srinivas of Mirantis, and Caleb Miles of CoreOS for their work bringing the 1.5 release to light.

Offline, the community can be found at one of the many Kubernetes related meetups around the world. The strength and scale of the community was visible in the crowded halls of CloudNativeCon/KubeCon Seattle (the recorded user talks are here). The next CloudNativeCon + KubeCon is in Berlin March 29-30, 2017, be sure to get your ticket and submit your talk before the CFP deadline of Dec 16th.

Ready to start contributing? Share your voice at our weekly community meeting. Thank you for your contributions and support!-- Aparna Sinha, Senior Product Manager, Google

↧

December 19, 2016, 2:35 pm

With the help of our growing community of 1,000 contributors, we pushed some 5,000 commits to extend support for production workloads and deliver Kubernetes 1.5. While many improvements and new features have been added, we selected few to highlight in a series of in-depths posts listed below.

This progress is our commitment in continuing to make Kubernetes best way to manage your production workloads at scale.Day 1 | |

Day 2 | |

Day 3 | |

Day 4 | |

Day 5 | |

Connect

Get involved with the Kubernetes project on GitHub Connect with the community on Slack

↧

December 19, 2016, 2:36 pm

At the lowest layers of a Kubernetes node is the software that, among other things, starts and stops containers. We call this the “Container Runtime”. The most widely known container runtime is Docker, but it is not alone in this space. In fact, the container runtime space has been rapidly evolving. As part of the effort to make Kubernetes more extensible, we've been working on a new plugin API for container runtimes in Kubernetes, called "CRI".

What is the CRI and why does Kubernetes need it?

Each container runtime has it own strengths, and many users have asked for Kubernetes to support more runtimes. In the Kubernetes 1.5 release, we are proud to introduce the Container Runtime Interface (CRI) -- a plugin interface which enables kubelet to use a wide variety of container runtimes, without the need to recompile. CRI consists of a protocol buffers and gRPC API, and libraries, with additional specifications and tools under active development. CRI is being released as Alpha in Kubernetes 1.5.

Supporting interchangeable container runtimes is not a new concept in Kubernetes. In the 1.3 release, we announced the rktnetes project to enable rkt container engine as an alternative to the Docker container runtime. However, both Docker and rkt were integrated directly and deeply into the kubelet source code through an internal and volatile interface. Such an integration process requires a deep understanding of Kubelet internals and incurs significant maintenance overhead to the Kubernetes community. These factors form high barriers to entry for nascent container runtimes. By providing a clearly-defined abstraction layer, we eliminate the barriers and allow developers to focus on building their container runtimes. This is a small, yet important step towards truly enabling pluggable container runtimes and building a healthier ecosystem.

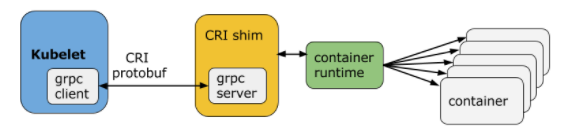

Overview of CRIKubelet communicates with the container runtime (or a CRI shim for the runtime) over Unix sockets using the gRPC framework, where kubelet acts as a client and the CRI shim as the server.

The protocol buffers API includes two gRPC services, ImageService, and RuntimeService. The ImageService provides RPCs to pull an image from a repository, inspect, and remove an image. The RuntimeService contains RPCs to manage the lifecycle of the pods and containers, as well as calls to interact with containers (exec/attach/port-forward). A monolithic container runtime that manages both images and containers (e.g., Docker and rkt) can provide both services simultaneously with a single socket. The sockets can be set in Kubelet by --container-runtime-endpoint and --image-service-endpoint flags.

Pod and container lifecycle managementservice RuntimeService { // Sandbox operations. rpc RunPodSandbox(RunPodSandboxRequest) returns (RunPodSandboxResponse) {}

rpc StopPodSandbox(StopPodSandboxRequest) returns (StopPodSandboxResponse) {}

rpc RemovePodSandbox(RemovePodSandboxRequest) returns (RemovePodSandboxResponse) {}

rpc PodSandboxStatus(PodSandboxStatusRequest) returns (PodSandboxStatusResponse) {}

rpc ListPodSandbox(ListPodSandboxRequest) returns (ListPodSandboxResponse) {}

// Container operations.

rpc CreateContainer(CreateContainerRequest) returns (CreateContainerResponse) {}

rpc StartContainer(StartContainerRequest) returns (StartContainerResponse) {}

rpc StopContainer(StopContainerRequest) returns (StopContainerResponse) {}

rpc RemoveContainer(RemoveContainerRequest) returns (RemoveContainerResponse) {}

rpc ListContainers(ListContainersRequest) returns (ListContainersResponse) {}

rpc ContainerStatus(ContainerStatusRequest) returns (ContainerStatusResponse) {} ...

} |

A Pod is composed of a group of application containers in an isolated environment with resource constraints. In CRI, this environment is called PodSandbox. We intentionally leave some room for the container runtimes to interpret the PodSandbox differently based on how they operate internally. For hypervisor-based runtimes, PodSandbox might represent a virtual machine. For others, such as Docker, it might be Linux namespaces. The PodSandbox must respect the pod resources specifications. In the v1alpha1 API, this is achieved by launching all the processes within the pod-level cgroup that kubelet creates and passes to the runtime.

Before starting a pod, kubelet calls RuntimeService.RunPodSandbox to create the environment. This includes setting up networking for a pod (e.g., allocating an IP). Once the PodSandbox is active, individual containers can be created/started/stopped/removed independently. To delete the pod, kubelet will stop and remove containers before stopping and removing the PodSandbox.

Kubelet is responsible for managing the lifecycles of the containers through the RPCs, exercising the container lifecycles hooks and liveness/readiness checks, while adhering to the restart policy of the pod.

Why an imperative container-centric interface?

Kubernetes has a declarative API with a Pod resource. One possible design we considered was for CRI to reuse the declarative Pod object in its abstraction, giving the container runtime freedom to implement and exercise its own control logic to achieve the desired state. This would have greatly simplified the API and allowed CRI to work with a wider spectrum of runtimes. We discussed this approach early in the design phase and decided against it for several reasons. First, there are many Pod-level features and specific mechanisms (e.g., the crash-loop backoff logic) in kubelet that would be a significant burden for all runtimes to reimplement. Second, and more importantly, the Pod specification was (and is) still evolving rapidly. Many of the new features (e.g., init containers) would not require any changes to the underlying container runtimes, as long as the kubelet manages containers directly. CRI adopts an imperative container-level interface so that runtimes can share these common features for better development velocity. This doesn't mean we're deviating from the "level triggered" philosophy - kubelet is responsible for ensuring that the actual state is driven towards the declared state.

Exec/attach/port-forward requestsservice RuntimeService { ... // ExecSync runs a command in a container synchronously.

rpc ExecSync(ExecSyncRequest) returns (ExecSyncResponse) {}

// Exec prepares a streaming endpoint to execute a command in the container.

rpc Exec(ExecRequest) returns (ExecResponse) {}

// Attach prepares a streaming endpoint to attach to a running container.

rpc Attach(AttachRequest) returns (AttachResponse) {}

// PortForward prepares a streaming endpoint to forward ports from a PodSandbox.

rpc PortForward(PortForwardRequest) returns (PortForwardResponse) {} ...

} |

Kubernetes provides features (e.g. kubectl exec/attach/port-forward) for users to interact with a pod and the containers in it. Kubelet today supports these features either by invoking the container runtime’s native method calls or by using the tools available on the node (e.g., nsenter and socat). Using tools on the node is not a portable solution because most tools assume the pod is isolated using Linux namespaces. In CRI, we explicitly define these calls in the API to allow runtime-specific implementations.

Another potential issue with the kubelet implementation today is that kubelet handles the connection of all streaming requests, so it can become a bottleneck for the network traffic on the node. When designing CRI, we incorporated this feedback to allow runtimes to eliminate the middleman. The container runtime can start a separate streaming server upon request (and can potentially account the resource usage to the pod!), and return the location of the server to kubelet. Kubelet then returns this information to the Kubernetes API server, which opens a streaming connection directly to the runtime-provided server and connects it to the client.

There are many other aspects of CRI that are not covered in this blog post. Please see the list of design docs and proposals for all the details.

Current status

Although CRI is still in its early stages, there are already several projects under development to integrate container runtimes using CRI. Below are a few examples:

If you are interested in trying these alternative runtimes, you can follow the individual repositories for the latest progress and instructions.

For developers interested in integrating a new container runtime, please see the developer guide for the known limitations and issues of the API. We are actively incorporating feedback from early developers to improve the API. Developers should expect occasional API breaking changes (it is Alpha, after all).

Try the new CRI-Docker integration

Kubelet does not yet use CRI by default, but we are actively working on making this happen. The first step is to re-integrate Docker with kubelet using CRI. In the 1.5 release, we extended kubelet to support CRI, and also added a built-in CRI shim for Docker. This allows kubelet to start the gRPC server on Docker’s behalf. To try out the new kubelet-CRI-Docker integration, you simply have to start the Kubernetes API server with --feature-gates=StreamingProxyRedirects=true to enable the new streaming redirect feature, and then start the kubelet with --experimental-cri=true.

Besides a few missing features, the new integration has consistently passed the main end-to-end tests. We plan to expand the test coverage soon and would like to encourage the community to report any issues to help with the transition.

CRI with Minikube

If you want to try out the new integration, but don’t have the time to spin up a new test cluster in the cloud yet, minikube is a great tool to quickly spin up a local cluster. Before you start, follow the instructions to download and install minikube.

1. Check the available Kubernetes versions and pick the latest 1.5.x version available. We will use v1.5.0-beta.1 as an example.

$ minikube get-k8s-versions |

2. Start a minikube cluster with the built-in docker CRI integration.

$ minikube start --kubernetes-version=v1.5.0-beta.1 --extra-config=kubelet.EnableCRI=true --network-plugin=kubenet --extra-config=kubelet.PodCIDR=10.180.1.0/24 --iso-url=http://storage.googleapis.com/minikube/iso/buildroot/minikube-v0.0.6.iso |

--extra-config=kubelet.EnableCRI=true` turns on the CRI implementation in kubelet. --network-plugin=kubenet and --extra-config=kubelet.PodCIDR=10.180.1.0/24 sets the network plugin to kubenet and ensures a PodCIDR is assigned to the node. Alternatively, you can use the cni plugin which does not rely on the PodCIDR. --iso-url sets an iso image for minikube to launch the node with. The image used in the example

3. Check the minikube log to check that CRI is enabled.

$ minikube logs | grep EnableCRI I1209 01:48:51.150789 3226 localkube.go:116] Setting EnableCRI to true on kubelet. |

4. Create a pod and check its status. You should see a “SandboxReceived” event as a proof that Kubelet is using CRI!

$ kubectl run foo --image=gcr.io/google_containers/pause-amd64:3.0 deployment "foo" created $ kubectl describe pod foo ... ... From Type Reason Message

... ----------------- ----- --------------- ----------------------------- ...{default-scheduler } Normal Scheduled Successfully assigned foo-141968229-v1op9 to minikube

...{kubelet minikube} Normal SandboxReceived Pod sandbox received, it will be created. ... |

Community

CRI is being actively developed and maintained by the Kubernetes SIG-Node community. We’d love to hear feedback from you. To join the community:

Post issues or feature requests on GitHub Join the #sig-node channel on Slack

--Yu-Ju Hong, Software Engineer, Google

↧

December 20, 2016, 1:41 pm

Editor’s note: this post is part of a series of in-depth articles on what's new in Kubernetes 1.5

In the latest release, Kubernetes 1.5, we’ve moved the feature formerly known as PetSet into beta as StatefulSet. There were no major changes to the API Object, other than the community selected name, but we added the semantics of “at most one pod per index” for deployment of the Pods in the set. Along with ordered deployment, ordered termination, unique network names, and persistent stable storage, we think we have the right primitives to support many containerized stateful workloads. We don’t claim that the feature is 100% complete (it is software after all), but we believe that it is useful in its current form, and that we can extend the API in a backwards-compatible way as we progress toward an eventual GA release.

When is StatefulSet the Right Choice for my Storage Application?

Deployments and ReplicaSets are a great way to run stateless replicas of an application on Kubernetes, but their semantics aren’t really right for deploying stateful applications. The purpose of StatefulSet is to provide a controller with the correct semantics for deploying a wide range of stateful workloads. However, moving your storage application onto Kubernetes isn’t always the correct choice. Before you go all in on converging your storage tier and your orchestration framework, you should ask yourself a few questions.

Can your application run using remote storage or does it require local storage media?

Currently, we recommend using StatefulSets with remote storage. Therefore, you must be ready to tolerate the performance implications of network attached storage. Even with storage optimized instances, you won’t likely realize the same performance as locally attached, solid state storage media. Does the performance of network attached storage, on your cloud, allow your storage application to meet its SLAs? If so, running your application in a StatefulSet provides compelling benefits from the perspective of automation. If the node on which your storage application is running fails, the Pod containing the application can be rescheduled onto another node, and, as it’s using network attached storage media, its data are still available after it’s rescheduled.

Do you need to scale your storage application?

What is the benefit you hope to gain by running your application in a StatefulSet? Do you have a single instance of your storage application for your entire organization? Is scaling your storage application a problem that you actually have? If you have a few instances of your storage application, and they are successfully meeting the demands of your organization, and those demands are not rapidly increasing, you’re already at a local optimum.

If, however, you have an ecosystem of microservices, or if you frequently stamp out new service footprints that include storage applications, then you might benefit from automation and consolidation. If you’re already using Kubernetes to manage the stateless tiers of your ecosystem, you should consider using the same infrastructure to manage your storage applications.

How important is predictable performance?

Kubernetes doesn’t yet support isolation for network or storage I/O across containers. Colocating your storage application with a noisy neighbor can reduce the QPS that your application can handle. You can mitigate this by scheduling the Pod containing your storage application as the only tenant on a node (thus providing it a dedicated machine) or by using Pod anti-affinity rules to segregate Pods that contend for network or disk, but this means that you have to actively identify and mitigate hot spots.

If squeezing the absolute maximum QPS out of your storage application isn’t your primary concern, if you’re willing and able to mitigate hotspots to ensure your storage applications meet their SLAs, and if the ease of turning up new "footprints" (services or collections of services), scaling them, and flexibly re-allocating resources is your primary concern, Kubernetes and StatefulSet might be the right solution to address it.

Does your application require specialized hardware or instance types?

If you run your storage application on high-end hardware or extra-large instance sizes, and your other workloads on commodity hardware or smaller, less expensive images, you may not want to deploy a heterogenous cluster. If you can standardize on a single instance size for all types of apps, then you may benefit from the flexible resource reallocation and consolidation, that you get from Kubernetes.

A Practical Example - ZooKeeper

ZooKeeper is an interesting use case for StatefulSet for two reasons. First, it demonstrates that StatefulSet can be used to run a distributed, strongly consistent storage application on Kubernetes. Second, it's a prerequisite for running workloads like Apache Hadoop and Apache Kakfa on Kubernetes. An in-depth tutorial on deploying a ZooKeeper ensemble on Kubernetes is available in the Kubernetes documentation, and we’ll outline a few of the key features below.

Creating a ZooKeeper EnsembleCreating an ensemble is as simple as using kubectl create to generate the objects stored in the manifest.service "zk-headless" created configmap "zk-config" created poddisruptionbudget "zk-budget" created statefulset "zk" created |

When you create the manifest, the StatefulSet controller creates each Pod, with respect to its ordinal, and waits for each to be Running and Ready prior to creating its successor.

$ kubectl get -w -l app=zk NAME READY STATUS RESTARTS AGE zk-0 0/1 Pending 0 0s zk-0 0/1 Pending 0 0s zk-0 0/1 Pending 0 7s zk-0 0/1 ContainerCreating 0 7s zk-0 0/1 Running 0 38s zk-0 1/1 Running 0 58s zk-1 0/1 Pending 0 1s zk-1 0/1 Pending 0 1s zk-1 0/1 ContainerCreating 0 1s zk-1 0/1 Running 0 33s zk-1 1/1 Running 0 51s zk-2 0/1 Pending 0 0s zk-2 0/1 Pending 0 0s zk-2 0/1 ContainerCreating 0 0s zk-2 0/1 Running 0 25s zk-2 1/1 Running 0 40s |

Examining the hostnames of each Pod in the StatefulSet, you can see that the Pods’ hostnames also contain the Pods’ ordinals.

$ for i in 0 1 2; do kubectl exec zk-$i -- hostname; done zk-0 zk-1 zk-2 |

ZooKeeper stores the unique identifier of each server in a file called “myid”. The identifiers used for ZooKeeper servers are just natural numbers. For the servers in the ensemble, the “myid” files are populated by adding one to the ordinal extracted from the Pods’ hostnames.$ for i in 0 1 2; do echo "myid zk-$i";kubectl exec zk-$i -- cat /var/lib/zookeeper/data/myid; done myid zk-0 1 myid zk-1 2 myid zk-2 3 |

Each Pod has a unique network address based on its hostname and the network domain controlled by the zk-headless Headless Service.

$ for i in 0 1 2; do kubectl exec zk-$i -- hostname -f; done zk-0.zk-headless.default.svc.cluster.local zk-1.zk-headless.default.svc.cluster.local zk-2.zk-headless.default.svc.cluster.local |

$ kubectl exec zk-0 -- cat /opt/zookeeper/conf/zoo.cfg clientPort=2181 dataDir=/var/lib/zookeeper/data dataLogDir=/var/lib/zookeeper/log tickTime=2000 initLimit=10 syncLimit=2000 maxClientCnxns=60 minSessionTimeout= 4000 maxSessionTimeout= 40000 autopurge.snapRetainCount=3 autopurge.purgeInteval=1 server.1=zk-0.zk-headless.default.svc.cluster.local:2888:3888 server.2=zk-1.zk-headless.default.svc.cluster.local:2888:3888 server.3=zk-2.zk-headless.default.svc.cluster.local:2888:3888 |

StatefulSet lets you deploy ZooKeeper in a consistent and reproducible way. You won’t create more than one server with the same id, the servers can find each other via a stable network addresses, and they can perform leader election and replicate writes because the ensemble has consistent membership.

The simplest way to verify that the ensemble works is to write a value to one server and to read it from another. You can use the “zkCli.sh” script that ships with the ZooKeeper distribution, to create a ZNode containing some data.

$ kubectl exec zk-0 zkCli.sh create /hello world ...

WATCHER::

WatchedEvent state:SyncConnected type:None path:null Created /hello |

You can use the same script to read the data from another server in the ensemble.

$ kubectl exec zk-1 zkCli.sh get /hello ...

WATCHER::

WatchedEvent state:SyncConnected type:None path:null world ... |

You can take the ensemble down by deleting the zk StatefulSet.

$ kubectl delete statefulset zk statefulset "zk" deleted |

The cascading delete destroys each Pod in the StatefulSet, with respect to the reverse order of the Pods’ ordinals, and it waits for each to terminate completely before terminating its predecessor.

$ kubectl get pods -w -l app=zk NAME READY STATUS RESTARTS AGE zk-0 1/1 Running 0 14m zk-1 1/1 Running 0 13m zk-2 1/1 Running 0 12m NAME READY STATUS RESTARTS AGE zk-2 1/1 Terminating 0 12m zk-1 1/1 Terminating 0 13m zk-0 1/1 Terminating 0 14m zk-2 0/1 Terminating 0 13m zk-2 0/1 Terminating 0 13m zk-2 0/1 Terminating 0 13m zk-1 0/1 Terminating 0 14m zk-1 0/1 Terminating 0 14m zk-1 0/1 Terminating 0 14m zk-0 0/1 Terminating 0 15m zk-0 0/1 Terminating 0 15m zk-0 0/1 Terminating 0 15m |

You can use kubectl apply to recreate the zk StatefulSet and redeploy the ensemble.

service "zk-headless" configured configmap "zk-config" configured statefulset "zk" created |

If you use the “zkCli.sh” script to get the value entered prior to deleting the StatefulSet, you will find that the ensemble still serves the data.

$ kubectl exec zk-2 zkCli.sh get /hello ...

WATCHER::

WatchedEvent state:SyncConnected type:None path:null world ... |

StatefulSet ensures that, even if all Pods in the StatefulSet are destroyed, when they are rescheduled, the ZooKeeper ensemble can elect a new leader and continue to serve requests.

Tolerating Node Failures

ZooKeeper replicates its state machine to different servers in the ensemble for the explicit purpose of tolerating node failure. By default, the Kubernetes Scheduler could deploy more than one Pod in the zk StatefulSet to the same node. If the zk-0 and zk-1 Pods were deployed on the same node, and that node failed, the ZooKeeper ensemble couldn’t form a quorum to commit writes, and the ZooKeeper service would experience an outage until one of the Pods could be rescheduled.

You should always provision headroom capacity for critical processes in your cluster, and if you do, in this instance, the Kubernetes Scheduler will reschedule the Pods on another node and the outage will be brief.

If the SLAs for your service preclude even brief outages due to a single node failure, you should use a PodAntiAffinity annotation. The manifest used to create the ensemble contains such an annotation, and it tells the Kubernetes Scheduler to not place more than one Pod from the zk StatefulSet on the same node.

Tolerating Planned Maintenance

The manifest used to create the ZooKeeper ensemble also creates a PodDistruptionBudget, zk-budget. The zk-budget informs Kubernetes about the upper limit of disruptions (unhealthy Pods) that the service can tolerate.

{ "podAntiAffinity": { "requiredDuringSchedulingRequiredDuringExecution": [{ "labelSelector": { "matchExpressions": [{ "key": "app", "operator": "In", "values": ["zk-headless"] }] }, "topologyKey": "kubernetes.io/hostname" }] } } } |

$ kubectl get poddisruptionbudget zk-budget NAME MIN-AVAILABLE ALLOWED-DISRUPTIONS AGE zk-budget 2 1 2h |

zk-budget indicates that at least two members of the ensemble must be available at all times for the ensemble to be healthy. If you attempt to drain a node prior taking it offline, and if draining it would terminate a Pod that violates the budget, the drain operation will fail. If you use kubectl drain, in conjunction with PodDisruptionBudgets, to cordon your nodes and to evict all Pods prior to maintenance or decommissioning, you can ensure that the procedure won’t be disruptive to your stateful applications.

Looking Forward

As the Kubernetes development looks towards GA, we are looking at a long list of suggestions from users. If you want to dive into our backlog, checkout the

GitHub issues with the stateful label. However, as the resulting API would be hard to comprehend, we don't expect to implement all of these feature requests. Some feature requests, like support for rolling updates, better integration with node upgrades, and using fast local storage, would benefit most types of stateful applications, and we expect to prioritize these. The intention of StatefulSet is to be able to run a large number of applications well, and not to be able to run all applications perfectly. With this in mind, we avoided implementing StatefulSets in a way that relied on hidden mechanisms or inaccessible features. Anyone can write a controller that works similarly to StatefulSets. We call this "making it forkable."

Over the next year, we expect many popular storage applications to each have their own community-supported, dedicated controllers or "

operators". We've already heard of work on custom controllers for etcd, Redis, and ZooKeeper. We expect to write some more ourselves and to support the community in developing others.

The Operators for

etcd and

Prometheus from CoreOS, demonstrate an approach to running stateful applications on Kubernetes that provides a level of automation and integration beyond that which is possible with StatefulSet alone. On the other hand, using a generic controller like StatefulSet or Deployment means that a wide range of applications can be managed by understanding a single config object. We think Kubernetes users will appreciate having the choice of these two approaches.

--Kenneth Owens & Eric Tune, Software Engineers, Google

↧

↧

December 21, 2016, 3:30 pm

Editor’s note: this post is part of a series of in-depth articles on what's new in Kubernetes 1.5

Extending on the theme of giving users choice, Kubernetes 1.5 release includes the support for Windows Servers. WIth more than 80% of enterprise apps running Java on Linux or .Net on Windows, Kubernetes is previewing capabilities that extends its reach to the mass majority of enterprise workloads.

The new Kubernetes Windows Server 2016 and Windows Container support includes public preview with the following features:- Containerized Multiplatform Applications - Applications developed in operating system neutral languages like Go and .NET Core were previously impossible to orchestrate between Linux and Windows. Now, with support for Windows Server 2016 in Kubernetes, such applications can be deployed on both Windows Server as well as Linux, giving the developer choice of the operating system runtime. This capability has been desired by customers for almost two decades.

- Support for Both Windows Server Containers and Hyper-V Containers - There are two types of containers in Windows Server 2016. Windows Containers is similar to Docker containers on Linux, and uses kernel sharing. The other, called Hyper-V Containers, is more lightweight than a virtual machine while at the same time offering greater isolation, its own copy of the kernel, and direct memory assignment. Kubernetes can orchestrate both these types of containers.

- Expanded Ecosystem of Applications - One of the key drivers of introducing Windows Server support in Kubernetes is to expand the ecosystem of applications supported by Kubernetes: IIS, .NET, Windows Services, ASP.NET, .NET Core, are some of the application types that can now be orchestrated by Kubernetes, running inside a container on Windows Server.

- Coverage for Heterogeneous Data Centers - Organizations already use Kubernetes to host tens of thousands of application instances across Global 2000 and Fortune 500. This will allow them to expand Kubernetes to the large footprint of Windows Server.

The process to bring Windows Server to Kubernetes has been a truly multi-vendor effort and championed by the Windows Special Interest Group (SIG) - Apprenda, Google, Red Hat and Microsoft were all involved in bringing Kubernetes to Windows Server. On the community effort to bring Kubernetes to Windows Server, Taylor Brown, Principal Program Manager at Microsoft stated that “This new Kubernetes community work furthers Windows Server container support options for popular orchestrators, reinforcing Microsoft’s commitment to choice and flexibility for both Windows and Linux ecosystems.”

Guidance for Current UsageWhere to use Windows Server support? | Right now organizations should start testing Kubernetes on Windows Server and provide feedback. Most organizations take months to set up hardened production environments and general availability should be available in next few releases of Kubernetes. |

What works? | Most of the Kubernetes constructs, such as Pods, Services, Labels, etc. work with Windows Containers. |

What doesn’t work yet? | Pod abstraction is not same due to networking namespaces. Net result is that Windows containers in a single POD cannot communicate over localhost. Linux containers can share networking stack by placing them in the same network namespace. DNS capabilities are not fully implemented UDP is not supported inside a container

|

When will it be ready for all production workloads (general availability)? | The goal is to refine the networking and other areas that need work to get Kubernetes users a production version of Windows Server 2016 - including with Windows Nano Server and Windows Server Core installation options - support in the next couple releases. |

Technical Demo

Roadmap

Support for Windows Server-based containers is in alpha release mode for Kubernetes 1.5, but the community is not stopping there. Customers want enterprise hardened container scheduling and management for their entire tech portfolio. That has to include full parity of features among Linux and Windows Server in production. The Windows Server SIG will deliver that parity within the next one or two releases of Kubernetes through a few key areas of investment:- Networking - the SIG will continue working side by side with Microsoft to enhance the networking backbone of Windows Server Containers, specifically around lighting up container mode networking and native network overlay support for container endpoints.

- OOBE - Improving the setup, deployment, and diagnostics for a Windows Server node, including the ability to deploy to any cloud (Azure, AWS, GCP)

- Runtime Operations - the SIG will play a key part in defining the monitoring interface of the Container Runtime Interface (CRI), leveraging it to provide deep insight and monitoring for Windows Server-based containers

Get Started

To get started with Kubernetes on Windows Server 2016, please visit the GitHub guide for more details.If you want to help with Windows Server support, then please connect with the Windows Server SIG or connect directly with Michael Michael, the SIG lead, on GitHub.

--Michael Michael, Senior Director of Product Management, Apprenda

![]() |

| Kubernetes on Windows Server 2016 Architecture |

↧

December 22, 2016, 3:00 pm

Editor’s note: this post is part of a series of in-depth articles on what's new in Kubernetes 1.5

In the latest Kubernetes 1.5 release, you’ll notice that support for Cluster Federation is maturing. That functionality was introduced in Kubernetes 1.3, and the 1.5 release includes a number of new features, including an easier setup experience and a step closer to supporting all Kubernetes API objects.

A new command line tool called ‘kubefed’ was introduced to make getting started with Cluster Federation much simpler. Also, alpha level support was added for Federated DaemonSets, Deployments and ConfigMaps. In summary:- DaemonSets are Kubernetes deployment rules that guarantee that a given pod is always present at every node, as new nodes are added to the cluster (more info).

- Deployments describe the desired state of Replica Sets (more info).

- ConfigMaps are variables applied to Replica Sets (which greatly improves image reusability as their parameters can be externalized - more info).

Federated DaemonSets, Federated Deployments, Federated ConfigMaps take the qualities of the base concepts to the next level. For instance, Federated DaemonSets guarantee that a pod is deployed on every node of the newly added cluster.

But what actually is “federation”? Let’s explain it by what needs it satisfies. Imagine a service that operates globally. Naturally, all its users expect to get the same quality of service, whether they are located in Asia, Europe, or the US. What this means is that the service must respond equally fast to requests at each location. This sounds simple, but there’s lots of logic involved behind the scenes. This is what Kubernetes Cluster Federation aims to do.

How does it work? One of the Kubernetes clusters must become a master by running a Federation Control Plane. In practice, this is a controller that monitors the health of other clusters, and provides a single entry point for administration. The entry point behaves like a typical Kubernetes cluster. It allows creating Replica Sets, Deployments, Services, but the federated control plane passes the resources to underlying clusters. This means that if we request the federation control plane to create a Replica Set with 1,000 replicas, it will spread the request across all underlying clusters. If we have 5 clusters, then by default each will get its share of 200 replicas.

This on its own is a powerful mechanism. But there’s more. It’s also possible to create a Federated Ingress. Effectively, this is a global application-layer load balancer. Thanks to an understanding of the application layer, it allows load balancing to be “smarter” -- for instance, by taking into account the geographical location of clients and servers, and routing the traffic between them in an optimal way.

In summary, with Kubernetes Cluster Federation, we can facilitate administration of all the clusters (single access point), but also optimize global content delivery around the globe. In the following sections, we will show how it works.

Creating a Federation Plane

In this exercise, we will federate a few clusters. For convenience, all commands have been grouped into 6 scripts available here:- 0-settings.sh

- 1-create.sh

- 2-getcredentials.sh

- 3-initfed.sh

- 4-joinfed.sh

- 5-destroy.sh

First we need to define several variables (0-settings.sh)$ cat 0-settings.sh && . 0-settings.sh # this project create 3 clusters in 3 zones. FED_HOST_CLUSTER points to the one, which will be used to deploy federation control plane export FED_HOST_CLUSTER=us-east1-b

# Google Cloud project name export FED_PROJECT=<YOUR PROJECT e.g. company-project>

# DNS suffix for this federation. Federated Service DNS names are published with this suffix. This must be a real domain name that you control and is programmable by one of the DNS providers (Google Cloud DNS or AWS Route53) export FED_DNS_ZONE=<YOUR DNS SUFFIX e.g. example.com> |

And get kubectl and kubefed binaries. (for installation instructions refer to guides here and here).Now the setup is ready to create a few Google Container Engine (GKE) clusters with gcloud container clusters create (1-create.sh). In this case one is in US, one in Europe and one in Asia.$ cat 1-create.sh && . 1-create.sh gcloud container clusters create gce-us-east1-b --project=${FED_PROJECT} --zone=us-east1-b --scopes cloud-platform,storage-ro,logging-write,monitoring-write,service-control,service-management,https://www.googleapis.com/auth/ndev.clouddns.readwrite

gcloud container clusters create gce-europe-west1-b --project=${FED_PROJECT} --zone=europe-west1-b --scopes cloud-platform,storage-ro,logging-write,monitoring-write,service-control,service-management,https://www.googleapis.com/auth/ndev.clouddns.readwrite

gcloud container clusters create gce-asia-east1-a --project=${FED_PROJECT} --zone=asia-east1-a --scopes cloud-platform,storage-ro,logging-write,monitoring-write,service-control,service-management,https://www.googleapis.com/auth/ndev.clouddns.readwrite |

The next step is fetching kubectl configuration with gcloud -q container clusters get-credentials (2-getcredentials.sh). The configurations will be used to indicate the current context for kubectl commands.$ cat 2-getcredentials.sh && . 2-getcredentials.sh gcloud -q container clusters get-credentials gce-us-east1-b --zone=us-east1-b --project=${FED_PROJECT}

gcloud -q container clusters get-credentials gce-europe-west1-b --zone=europe-west1-b --project=${FED_PROJECT}

gcloud -q container clusters get-credentials gce-asia-east1-a --zone=asia-east1-a --project=${FED_PROJECT} |

Let’s verify the setup:$ kubectl config get-contexts CURRENT NAME CLUSTER AUTHINFO NAMESPACE * gke_container-solutions_europe-west1-b_gce-europe-west1-b gke_container-solutions_europe-west1-b_gce-europe-west1-b gke_container-solutions_europe-west1-b_gce-europe-west1-b gke_container-solutions_us-east1-b_gce-us-east1-b gke_container-solutions_us-east1-b_gce-us-east1-b gke_container-solutions_us-east1-b_gce-us-east1-b gke_container-solutions_asia-east1-a_gce-asia-east1-a gke_container-solutions_asia-east1-a_gce-asia-east1-a gke_container-solutions_asia-east1-a_gce-asia-east1-a |

We have 3 clusters. One, indicated by the FED_HOST_CLUSTER environment variable, will be used to run the federation plane. For this, we will use the kubefed init federation command (3-initfed.sh).$ cat 3-initfed.sh && . 3-initfed.sh kubefed init federation --host-cluster-context=gke_${FED_PROJECT}_${FED_HOST_CLUSTER}_gce-${FED_HOST_CLUSTER} --dns-zone-name=${FED_DNS_ZONE} |

You will notice that after executing the above command, a new kubectl context has appeared:$ kubectl config get-contexts CURRENT NAME CLUSTER AUTHINFO NAMESPACE ... federation federation |

The federation context will become our administration entry point. Now it’s time to join clusters (4-joinfed.sh):